Recently, there has been a lot of commotion around an emerging technology called Deepfake. This artificial intelligence technique, based on deep learning, allows content to be manipulated to a concerning level of realism. However, aside from all the socio-political threats, what is actually out there right now?

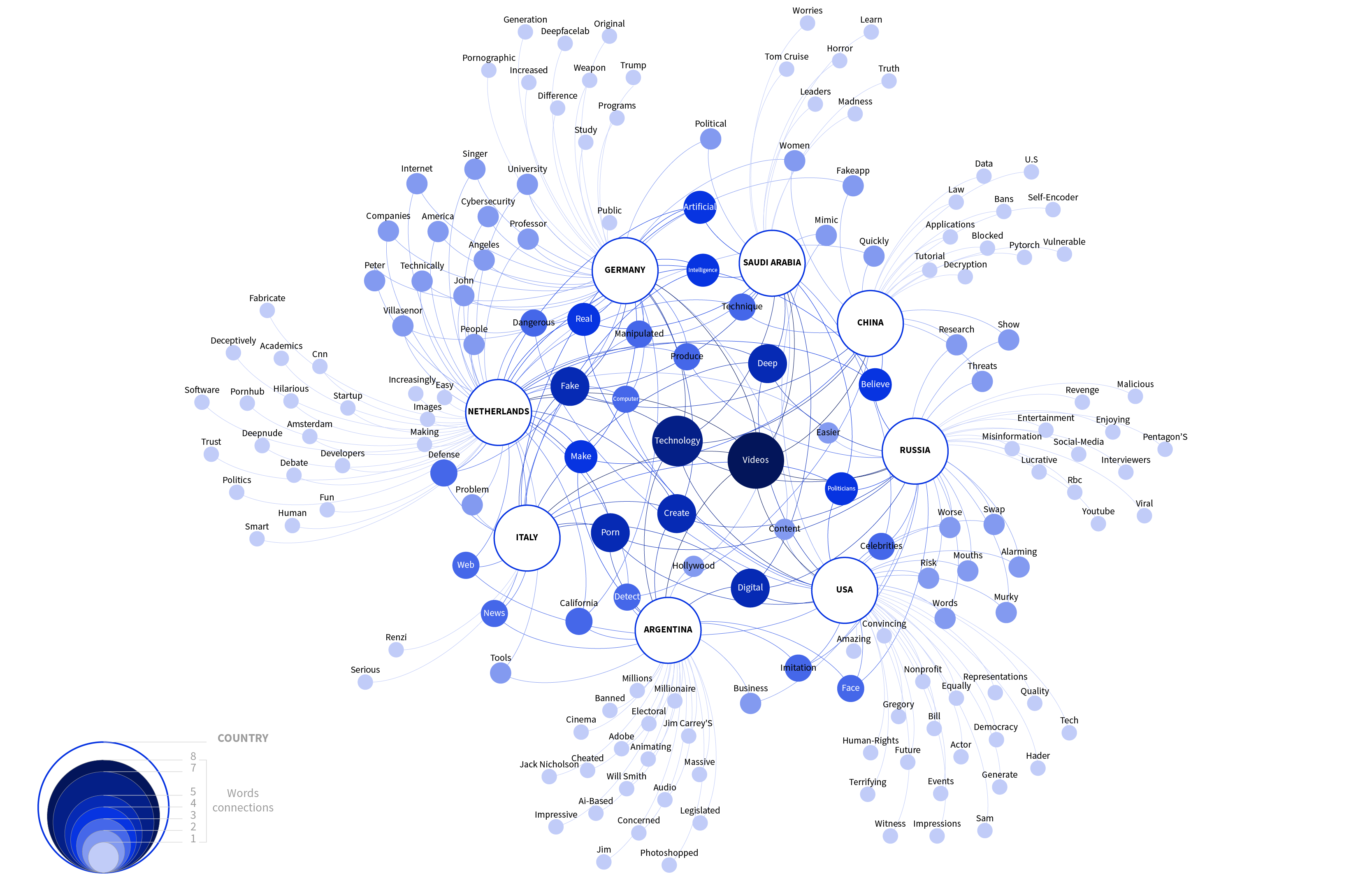

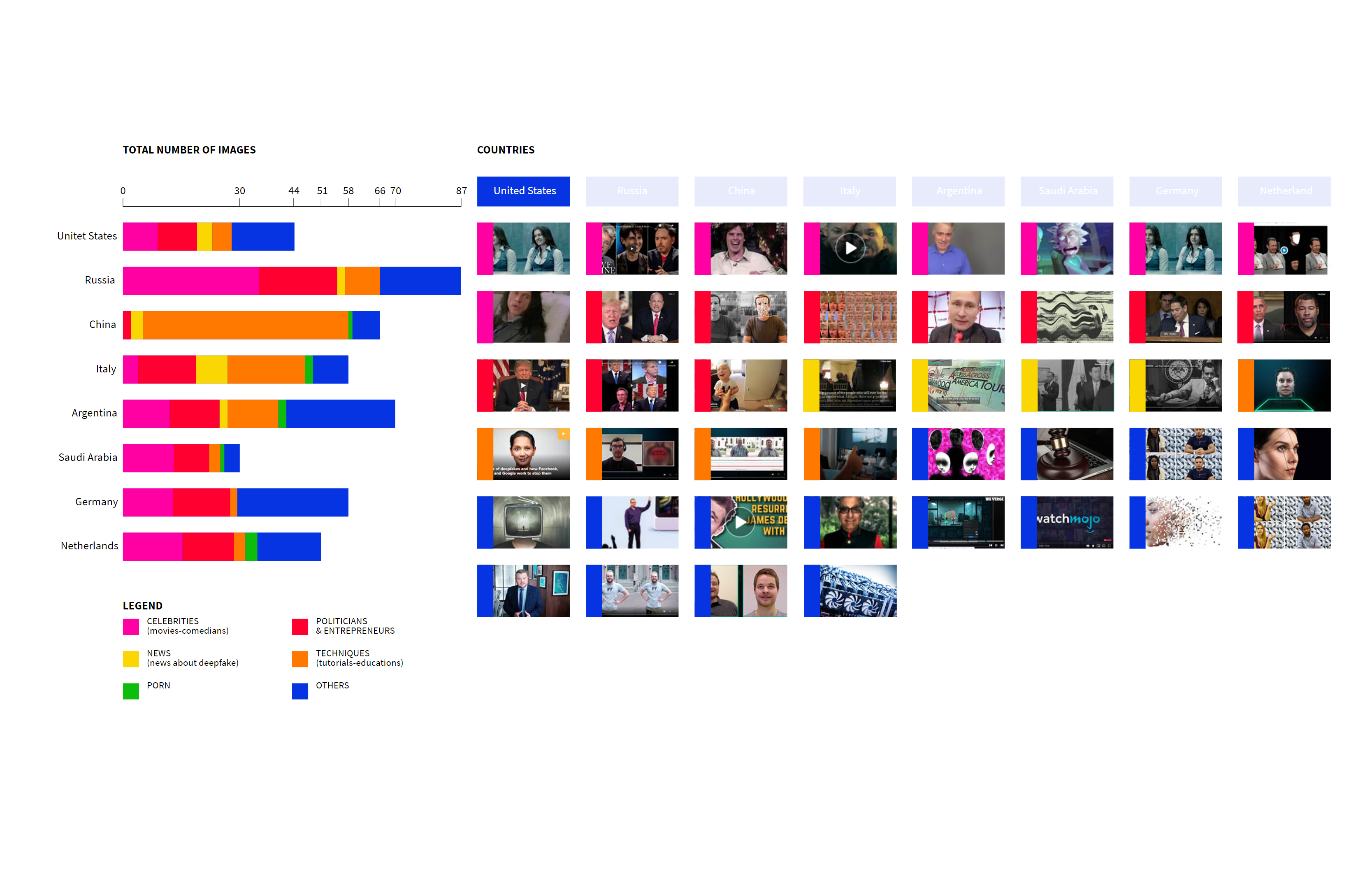

This research digs into different platforms to explore the current situation around Deepfake. The first protocol introduces the topic by studying the differences in the vocabulary used to describe the phenomenon. The general language among top search results conveys concern, but does the Deepfake content found on the internet reflect the worried message presented to the public? Will the oblivious internet wanderer come across critical content, or does this darkly portrayed technology have an entertaining side as well?

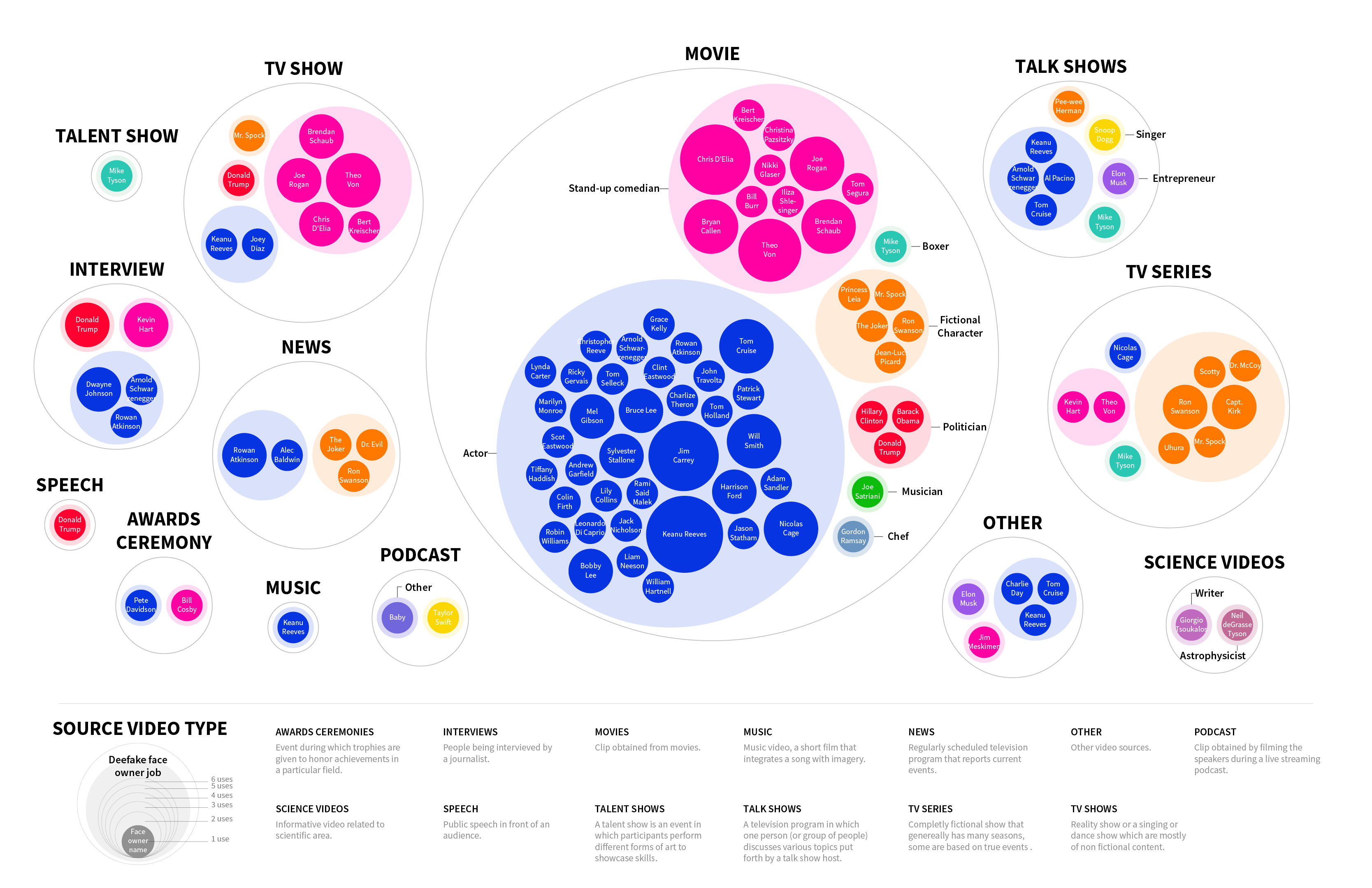

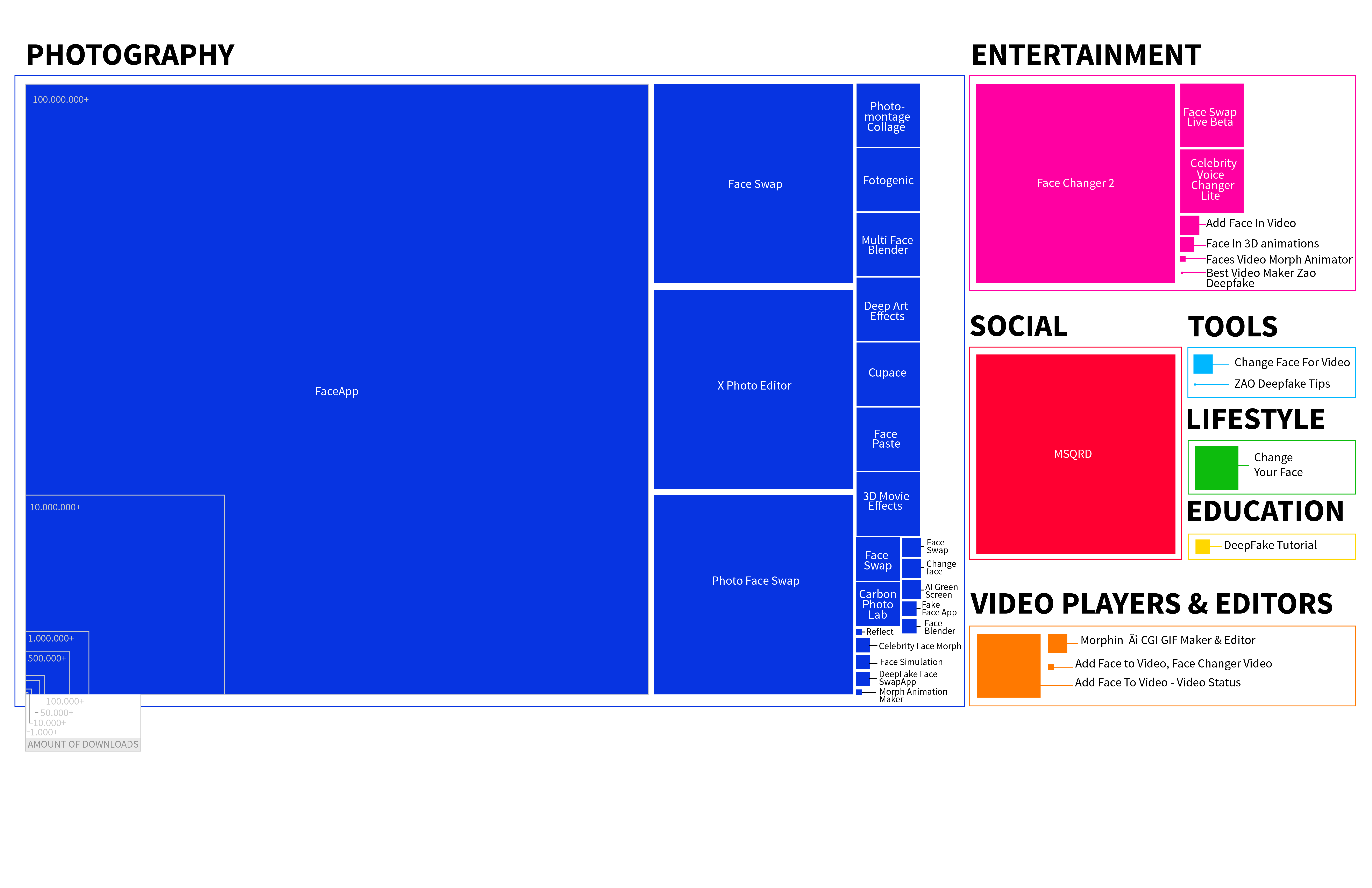

This research makes it evident that the tools to create a Deepfake are accessible to anyone, ranging from simple applications to approaches that require complex programming. A study of YouTube confirmed that there is a community that creates "amusing" variations of legendary video content using Deepfake. The sci-fi dangers described in the news seem rather distant, whilst the technology's innocent side is close at hand. Aren't these the characteristics of the most dangerous threats? Content manipulation is at its most powerful when it goes unrecognised, and the sheer number of badly executed examples convinces viewers that spotting a Deepfake is still easy.